Drug Development Life Cycle

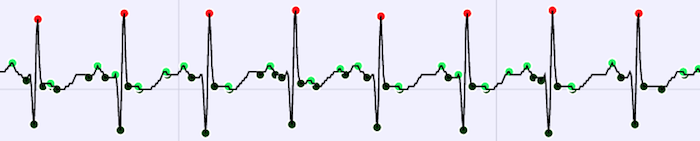

Epidemiological Models

Infectious Disease Transmission Network Modelling with Julia

Gillespie.jl. For a reference on Gillespie methods, check out this excellent review by Des Higham.

SEIR model by Dr. Chris Rackauckas from the lab
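For readers new to the term, a textbook SEIR (susceptible, exposed, infectious, recovered) model can be written as the system of ordinary differential equations below. This is a generic sketch, not necessarily the parameterization used in the linked model; the symbols $\beta$ (transmission rate), $\sigma$ (rate of leaving the exposed class), $\gamma$ (recovery rate), and the constant total population $N$ are assumptions introduced here for illustration.

```latex
\begin{aligned}
\frac{dS}{dt} &= -\beta \frac{S I}{N}, \\
\frac{dE}{dt} &= \beta \frac{S I}{N} - \sigma E, \\
\frac{dI}{dt} &= \sigma E - \gamma I, \\
\frac{dR}{dt} &= \gamma I, \qquad N = S + E + I + R .
\end{aligned}
```

The four right-hand sides sum to zero, so $N$ is conserved; in this simple form the basic reproduction number is $R_0 = \beta / \gamma$.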

Scientific Machine Learning

See some results on COVID-19 data in this presentation on Physics-informed machine learning with language-wide automatic differentiation.

SciML: An Open Source Software Organization for Scientific Machine Learning

Timely MIT Class (Spring 2020), 6.S083/18.S190: Computational Thinking with Applications to COVID-19

With thanks to MIT's Schwarzman College of Computing, Dept of EECS, and Dept of Mathematics.

Julia is a programming language created by Jeff Bezanson, Alan Edelman, Stefan Karpinski, and Viral B. Shah in 2009 and released publicly in 2012. Julia now has over ten million downloads.

News flash: Wilkinson Prize for Julia! (MIT News, December 26, 2018)

News flash from Google's Jeff Dean: Julia + TPUs = fast and easily expressible machine learning computations. (Tweet, October 23, 2018)

The Julia Lab embraces openness and the solving of human problems. Today, the Julia Lab collaborates with a variety of researchers on real-world problems and applications, while simultaneously working on the core language and its ecosystem.

Publications

Conferences

Jarrett Revels, "Automatic Differentiation in the Julia Language." SIAM Manchester Julia Workshop 2016.

Jarrett Revels, "Automatic Differentiation in the Julia Language." AD2016 - 7th International Conference on Algorithmic Differentiation.

Jarrett Revels, "ForwardDiff.jl: Fast Derivatives Made Easy." JuliaCon 2016.

Past Research

The Julia Lab specializes in collaborating with other groups to solve messy real-world computational problems.

Statistical genomics

Existing bioinformatics tools aren't performant enough to handle the exabytes of data produced by modern genomics research each year, and general purpose linear algebra libraries are not optimized to take advantage of this data's inherent structure. To address this problem, the Julia Lab is developing specialized algorithms for principal component analysis and statistical fitting that will enable genomics researchers to analyze data at the same rapid pace that it is produced.

This project is an exciting interdisciplinary collaboration with Dr. Stavros Papadopoulos (Senior Research Scientist at Intel Labs) and Prof. Nikolaos Patsopoulos (Assistant Professor at Brigham and Women's Hospital, the Broad Institute and Harvard Medical School).

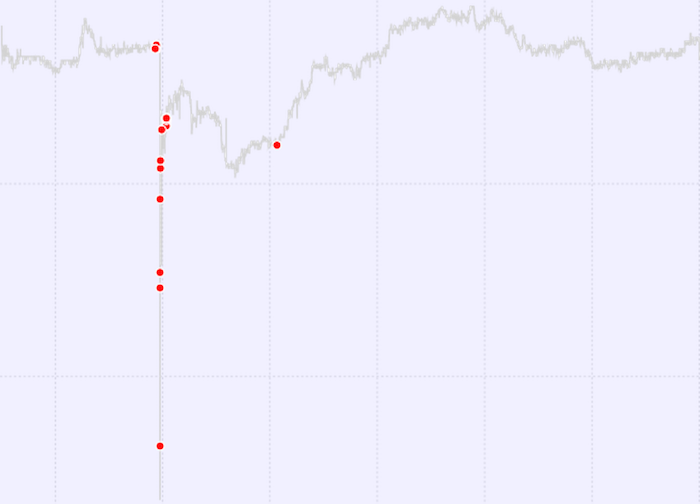

Financial Fraud Detection

A single stock exchange generates high-frequency trading (HFT) data at a rate of ~2.2 terabytes per month. Automatic identification of suspicious financial transactions in these high-throughput HFT data streams is an active area of research. The Julia Lab contributes to the battle against financial fraud by designing out-of-core analytics for anomaly detection.

Medical Data Analytics

Hospitals, like many large organizations, collect much more data than human experts can usefully process and analyze with today's available software. As a result, analyses are often limited to small subsets of the data and can overlook statistical clues that might have led to substantial improvements in patient care.

In collaboration with Harvard Medical School, the Julia Lab has worked on tools for rapidly identifying potential indicators of irregularities in medical data, equipping doctors and healthcare providers with the analytics they need to make informed medical decisions.

Numerical Linear Algebra and Parallel Computing

The Julia Lab leads the JuliaParallel organization, which maintains the following projects:

DistributedArrays.jl: a native Julia distributed array implementation

MPI.jl: a wrapper for Message Passing Interface (MPI)

ClusterManagers.jl: Julia support for different job queue systems commonly used on compute clusters

Dagger.jl: a Dask-like framework for out-of-core and parallel computation

Elemental.jl: a wrapper for Elemental, a distributed linear algebra/optimization library developed by Prof. Jack Poulson

ScaLAPACK.jl: a wrapper for the Scalable Linear Algebra Package

HDFS.jl: a wrapper for the Hadoop Distributed FileSystem

Elly.jl: a client for Apache YARN

The Julia Lab also collaborates with Prof. Steven G. Johnson and Jared Crean in the development of PETSc.jl, a wrapper for the Portable, Extensible Toolkit for Scientific Computation.

People

Current Members

Prof. Alan Edelman (Principal Investigator)

Dr. Jeremy Kepner - Founding Sponsor (MIT Lincoln Laboratory)

Dr. Chris Rackauckas (Applied Math Instructor)

Prof. David P. Sanders (Visiting Professor)

Peter Ahrens (PhD Student)

Sung Woo Jeong (PhD Student)

Albert Gnadt (PhD Student)

Valentin Churavy (PhD Student)

Ranjan Anantharaman (PhD Student)

Shashi Gowda (PhD Student)

Cooper Sloan (M.Eng.)

Jerry Lingjie Mei (Undergraduate)

Adelaide (Addy) Chambers (Undergraduate)

Luana Lara (Undergraduate)

Lin Pease (Undergraduate)

Kelly Shen (Undergraduate)

Mark Wang (Undergraduate)

Collin Warner (Undergraduate)

Alumni

Prof. Ivan Slapničar (Visiting Professor, Fall 2014)

Dr. Jeff Bezanson (PhD Student, Fall 2009-Spring 2015)

Dr. Matt Bauman (Visiting PhD Student, Fall 2015)

Stefan Karpinski (Data Scientist, Summer 2013-Fall 2014)

Jameson Nash (Undergraduate Student, Summer 2013)

Weijian Zhang (Visiting Student, Spring 2016)

Jake Bolewski (Research Engineer, Summer 2014-Spring 2016)

Oscar Blumberg (Visiting Masters Student, Spring-Summer 2015)

Keno Fischer (Undergraduate Student, Summer 2013 and Summer 2014)

Runpeng Liu (Undergraduate Student, Fall 2015)

Dr. Xianyi Zhang (Postdoctoral Associate, 2016)

Dr. Eka Palamadai (PhD Student, Fall 2011-Spring 2016)

Valentin Churavy (Visiting PhD Student, Summer-Fall 2016)

Tim Besard (Visiting PhD Student, Summer-Fall 2016)

David A. Gold (Visiting PhD Student, Summer 2016)

Jacob Higgins (Undergraduate, Summer 2016)

Dr. Andreas Noack (Postdoctoral Associate)

Dr. Jiahao Chen (Research Scientist, Fall 2013-Spring 2017)

Jarrett Revels (Research Engineer)

Dr. Deniz Yuret (Visiting Professor)

Collaborators

The Julia group is grateful for numerous collaborations at MIT and around the world:

Prof. Steven G. Johnson (MIT Mathematics)

Prof. Juan Pablo Vielma (MIT Sloan)

Dr. Vijay Ivaturi (University of Maryland, Baltimore)

Dr. Homer Reid (MIT Mathematics)

Dr. Alex Townsend (Cornell)

Dr. Jean Yang (MIT CSAIL alum, Harvard Medical School)

The JuliaOpt team at the Operations Research Center

Simon Kornblith (MIT Brain and Cognitive Sciences)

Jon Malmaud (MIT Brain and Cognitive Sciences)

Spencer Russell (MIT Media Lab)

Chiyuan Zhang (MIT CSAIL), author of the popular Mocha.jl framework for deep learning

Zenna Tavares (MIT CSAIL), author of the Sigma.jl probabilistic programming environment

Sponsorship

We thank NSF, Amazon, DARPA XDATA, the Intel Science and Technology Center for Big Data, Saudi Aramco, the MIT Institute for Soldier Nanosystems, and NIH BD2K for their generous financial support.

The Julia Lab is a member of the bigdata@CSAIL MIT Big Data Initiative and gratefully acknowledges sponsorship from the MIT EECS SuperUROP Program and the MIT UROP Office for our talented undergraduate researchers.